Properly adjusted color can have as much effect on video quality as an increase in size. The process of basic color correction is simple: adjust the range of black to white (tonality) and the color balance (hue and saturation) so that the image (pick any of the following)

Digital video is represented in either RGB or in YCbCr (often called YUV) colorspace. A color space is simply a way of representing a color. Both the RGB and the YCbCr colorspace formulas contain three variables, also known as components or channels. RGB's are red, green and blue. YCbCr's are Y = luma (or black and white or lightness) and CbCr = chroma (or color), where Cb = Blue minus 'black and white', and Cr = Red minus 'black and white'.
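The relation between the two sets of components can be sketched in a few lines of Python. This is only an illustration: the luma weights below are the BT.601 ones, which is an assumption (the text does not name a standard), and the function name is made up for this example. Real YCbCr additionally scales and offsets the two differences.

```python
# BT.601 luma weights (assumption: the guide does not name a standard).
KR, KG, KB = 0.299, 0.587, 0.114

def luma_and_differences(r, g, b):
    """Return (Y, B-Y, R-Y) for RGB components given in the range 0.0-1.0."""
    y = KR * r + KG * g + KB * b   # the "black and white" part
    return y, b - y, r - y          # unscaled Cb and Cr

grey = luma_and_differences(0.5, 0.5, 0.5)   # both differences are ~0
red = luma_and_differences(1.0, 0.0, 0.0)    # large positive R-Y

print("grey:", grey)
print("red: ", red)
```

A grey pixel has no color, so both differences come out at (practically) zero, while a pure red gives a large R-Y and a negative B-Y.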

The figure shows that several RGB colors represent the same YUV color. This implies that a lossless RGB -> YCbCr -> RGB is not possible. (Note that the YCbCr value [16,128,128] is translated to [0,0,0].)
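To see the loss for yourself, here is a rough Python sketch. It assumes 8-bit BT.601 coefficients with the 16-235 luma range; the coefficients and function names are assumptions for this example, not something taken from the figure. It converts a small block of RGB colors to YCbCr and back, and counts what survives.

```python
def rgb_to_ycbcr(r, g, b):
    """8-bit RGB (0-255) to 8-bit YCbCr (Y 16-235, CbCr 16-240), BT.601."""
    y = 16 + (65.481 * r + 128.553 * g + 24.966 * b) / 255
    cb = 128 + (-37.797 * r - 74.203 * g + 112.0 * b) / 255
    cr = 128 + (112.0 * r - 93.786 * g - 18.214 * b) / 255
    return round(y), round(cb), round(cr)

def clamp8(x):
    """Round and limit a value to the 8-bit range 0-255."""
    return max(0, min(255, round(x)))

def ycbcr_to_rgb(y, cb, cr):
    """Inverse of rgb_to_ycbcr, with clamping of out-of-range results."""
    c, d, e = y - 16, cb - 128, cr - 128
    r = 1.164383 * c + 1.596027 * e
    g = 1.164383 * c - 0.391762 * d - 0.812968 * e
    b = 1.164383 * c + 2.017232 * d
    return clamp8(r), clamp8(g), clamp8(b)

# A 32x32x32 block of mid-range RGB colors (kept small so it runs quickly).
rgb_colors = [(r, g, b) for r in range(100, 132)
                        for g in range(100, 132)
                        for b in range(100, 132)]
ycbcr_codes = {rgb_to_ycbcr(*c) for c in rgb_colors}
changed = sum(1 for c in rgb_colors if ycbcr_to_rgb(*rgb_to_ycbcr(*c)) != c)

print(len(rgb_colors), "RGB colors ->", len(ycbcr_codes), "distinct YCbCr codes")
print(changed, "colors do not survive the round trip")
```

Because fewer YCbCr codes come out than RGB colors went in, some RGB colors must share a code, and those cannot all be restored on the way back.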

Bit depth describes the number of bits used to encode each color channel of the video signal. 8 bits is the consumer standard. This provides for 256 (= 2^8) shades of a given color. When you adjust a given channel (such as red or luma) of a given pixel, you are simply changing the value within a range of 0-255.

Subsampling refers to keeping less detail for the color components in digital video. Subsampling is only used in the YCbCr colorspace. 4:2:2 subsampling describes keeping color information (CbCr) for only every other pixel, on every line. YUY2 is a PC storage reference to YCbCr 4:2:2. 4:1:1 is keeping color for every 4th pixel on every line. NTSC DV is 4:1:1 YCbCr. 4:2:0 is keeping color for every other pixel on every other line, so each 2x2 block of pixels shares one color sample. MPEG and PAL DV use YCbCr 4:2:0. YV12 is a PC storage reference to 4:2:0 YCbCr.
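The storage effect of each scheme is easy to work out. The sketch below assumes 8 bits per sample and a 720x480 frame (both assumptions for the example, as are the names) and counts the bytes needed for one uncompressed frame.

```python
# (horizontal divisor, vertical divisor) of the two chroma planes per scheme
SCHEMES = {"4:4:4": (1, 1), "4:2:2": (2, 1), "4:1:1": (4, 1), "4:2:0": (2, 2)}

def frame_bytes(width, height, scheme):
    """Bytes for one 8-bit YCbCr frame: full luma plus two reduced chroma planes."""
    h_div, v_div = SCHEMES[scheme]
    luma = width * height
    chroma = 2 * (width // h_div) * (height // v_div)
    return luma + chroma

for name in SCHEMES:
    print(name, frame_bytes(720, 480, name))
```

4:1:1 and 4:2:0 end up the same size (half of the unsubsampled 4:4:4 frame); they just throw the color detail away in different directions.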

Subsampling is used to reduce the storage and broadcast bandwidth requirements for digital video. This is effective for a YCbCr signal because the human eye is more sensitive to changes in black and white than to changes in color, so drastically reducing the color info shows very little difference. Composite analogue video signals, such as PAL YUV or NTSC YIQ, use a similar technique to reduce analogue storage and broadcast requirements.

Although you can capture an analogue source in a few different formats, YUY2 is the most advisable. This is because the analogue source is already in a YCbCr-like format (namely YUV for PAL and YIQ for NTSC), and YUY2 provides the least amount of subsampling. You can perform corrections in either the RGB or YUY2 color space. Each has its distinct advantages and disadvantages. VirtualDub operates exclusively in RGB. AviSynth can be used to correct video in either RGB or YCbCr (YUY2). Please see this explanation of colorspaces for more details and some excellent links, especially the discussion of Data Storage.

A good thing to know: if you feed a YUY2 file into VirtualDub or TMPGEnc, it will be converted to RGB before it gets there. This conversion will be done by the codec (such as Huffyuv or an MJPEG codec) if you open the AVI directly. It can be done by an explicit ConvertToRGB32() within AviSynth, or it will be done by your operating system if you open the file in AviSynth and output uncompressed YUY2.

The reason you may care is that the luma range may be handled differently depending upon the method of conversion. The standard way of converting is to take the normal range of YUY2 (which is 16-235) and expand it to the normal range of RGB (which is 0-255). Huffyuv, the AviSynth conversion routines, and the default OS conversions do this. The downside is that any values outside of the normal range will be set to 0 or 255. This is called clamping.

A non-standard method of conversion is to keep the 16-235 range for the RGB. The problem is that if you don't deal with this correctly, you will get washed-out looking video. The major MJPEG codecs (and some DV ones) do this non-standard YUY2->RGB conversion. TMPGEnc and other MPEG encoders have a setting to adjust for this. In VirtualDub, you need to make the adjustment manually, or simply know what you have before you feed it to your encoder.
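The difference between the two conversions can be sketched for the luma channel alone. This is a simplified illustration with made-up function names, not the code any of the mentioned codecs actually run.

```python
def expand_luma(y):
    """Standard conversion: stretch 16-235 to 0-255 and clamp everything else."""
    value = round((y - 16) * 255 / 219)
    return max(0, min(255, value))

def keep_luma(y):
    """Non-standard conversion: pass the 16-235 range through unchanged."""
    return y

for y in (10, 16, 126, 235, 250):
    print(y, "->", expand_luma(y), "(expanded)", keep_luma(y), "(kept)")
```

With the standard conversion, 10 and 16 both end up as 0, and 235 and 250 both end up as 255: detail outside 16-235 is clamped away. With the non-standard conversion nothing is lost, but black stays at 16 and white at 235, which is what looks washed out if it is displayed as ordinary RGB.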

References:

Converting YUV to RGB: doom9 thread about converting from YUV to RGB, what happens with the luma range when using huffyuv and mjpeg and how to correct for that in AviSynth, TMPGEnc and CCE.

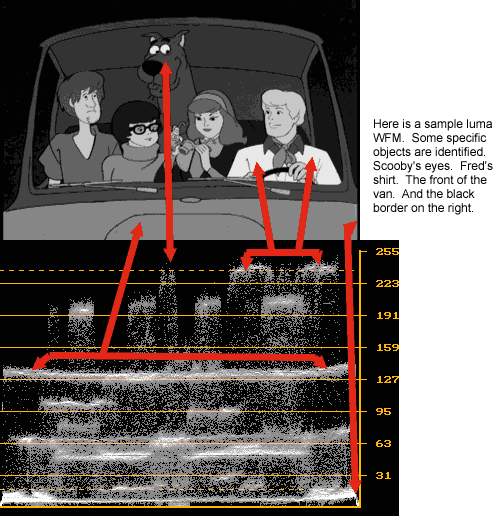

Adjusting colors by eye is a difficult task. Thankfully, there are some tools that graph a video image. These make some adjustments pretty straightforward.

A waveform monitor (wfm) graph keeps the horizontal (width) position of every pixel, and replaces its vertical (height) position with the pixel's intensity value (or luma value) (0-255). With this graph, one can determine the range of intensity values in a given picture, and pinpoint the specific objects that have those values.
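The idea behind the graph can be sketched in plain Python. This is a toy version with a hypothetical `waveform` function and a tiny hand-made image, not how any of the tools mentioned in this guide are implemented.

```python
def waveform(luma_rows, levels=256):
    """Build a waveform graph from rows of luma values (each 0-255).

    Returns a grid with `levels` rows and one column per image column;
    grid[v][x] counts the pixels in image column x whose luma is v.
    """
    width = len(luma_rows[0])
    grid = [[0] * width for _ in range(levels)]
    for row in luma_rows:
        for x, value in enumerate(row):
            grid[value][x] += 1
    return grid

# Tiny 4-wide, 3-high test image: dark on the left, bright on the right.
image = [
    [16, 16, 128, 235],
    [16, 40, 128, 235],
    [16, 16, 130, 235],
]
wfm = waveform(image)

# Luma range present in each image column, read off the graph.
ranges = [(min(v for v in range(256) if wfm[v][x]),
           max(v for v in range(256) if wfm[v][x])) for x in range(4)]
print(ranges)
```

Each column of the graph only tells you which luma values occur at that horizontal position, which is exactly why a bright object on the right of the picture shows up as a high trace on the right of the graph. A real display draws high values at the top.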

AviSynth has a built in wfm in the histogram function. There are also two AviSynth plugins that show a waveform monitor: VideoScope by Randy French and VectorScope by hanfrunz. For VirtualDub, there is clrtools by Trevlac that shows a wfm.

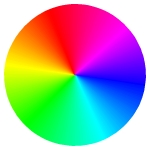

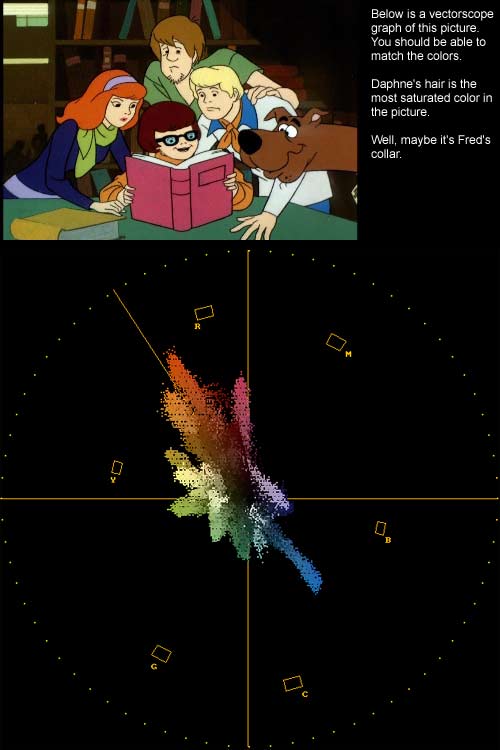

A vectorscope graph shows the color portion of a video picture mapped around a circle. It can be used to determine the hue and saturation of a color. Going clockwise around the circle, red falls at about 11:30, next come magenta, blue, cyan, green, and yellow. As the hue of a color changes, it moves around the circle.
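The positions around the circle can be approximated with a little math. The sketch below assumes BT.601 color differences, with Cb plotted on the horizontal axis and Cr on the vertical axis; that layout and all the names are assumptions for this example, and real scopes may differ in detail.

```python
import math

def vectorscope_position(r, g, b):
    """Return (angle in degrees clockwise from 12 o'clock, radius) for 8-bit RGB."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 0.564 * (b - y)   # horizontal axis
    cr = 0.713 * (r - y)   # vertical axis
    angle = math.degrees(math.atan2(cb, cr)) % 360  # measured clockwise from "up"
    return angle, math.hypot(cb, cr)

def clock_reading(angle):
    """Convert an angle (degrees clockwise from 12 o'clock) to 'h:mm'."""
    total_minutes = round(angle / 360 * 12 * 60) % 720
    hours, minutes = divmod(total_minutes, 60)
    return f"{hours or 12}:{minutes:02d}"

PRIMARIES = {
    "red": (255, 0, 0), "magenta": (255, 0, 255), "blue": (0, 0, 255),
    "cyan": (0, 255, 255), "green": (0, 255, 0), "yellow": (255, 255, 0),
}

for name, rgb in PRIMARIES.items():
    angle, radius = vectorscope_position(*rgb)
    print(f"{name:8s} {clock_reading(angle):>5s}  radius {radius:.0f}")
```

Under these assumptions red lands a little before 11:30, and the other colors follow clockwise in the order given above. A grey pixel has a radius of (practically) zero, so it sits at the center.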

As the value for a color increases (0-255), its intensity increases. A color is more saturated as the amount of the given color increases versus all other colors. For example, RGB R=200, G=10, B=10 is a 95% saturated red, whereas RGB R=200, G=100, B=100 is a 50% saturated red (better known as pink). Completely desaturated colors (the greyscale) are plotted at the center of the vectorscope. As a color's pure (saturated) intensity increases, it moves out toward the edge of the scope.
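The percentages in the example can be reproduced with the HSV definition of saturation. The text does not say which formula it uses, so treat this as an assumption that happens to match the numbers.

```python
def saturation(r, g, b):
    """HSV-style saturation: how far the weakest channel falls below the strongest."""
    highest, lowest = max(r, g, b), min(r, g, b)
    return 0.0 if highest == 0 else (highest - lowest) / highest

print(saturation(200, 10, 10))    # saturated red
print(saturation(200, 100, 100))  # pink
print(saturation(128, 128, 128))  # grey
```

The strong red comes out at 0.95, the pink at 0.5 and the grey at 0.0, matching the 95% and 50% figures above.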

Color intensity and saturation are intermingled when it comes to adjustment controls. Thankfully, a vectorscope can be used to simply see what a control changes. If a color moves towards the edge of the circle, it is getting more saturated.

In summary, the hue is the color flavor, the saturation is the dominance of the hue in the color, and the intensity is the black-white portion. (Note that this black-white portion is not the same as the luminance (Y) of a signal. The latter depends on both the intensity and the saturation.)

There is a VectorScope by hanfrunz for AviSynth that shows a vectorscope. For VirtualDub, there is clrtools by Trevlac that can show a vectorscope. This can also be used in an AviSynth script. Finally, the built in AviSynth function Histogram(mode="color") is actually a vectorscope display. Unfortunately, it is a bit hard to read, especially for someone unfamiliar with what it shows.

References:

Color Principles - Hue, Saturation, and Value: About various models of color and the properties of color and to apply that understanding to appropriate color selection for visualizations.

ColorFAQ (by Charles Poynton): This document clarifies aspects of colour specification and image coding that are important to computer graphics, image processing, video, and the transfer of digital images to print.

About the meaning of gamma.

About Waveform Monitors and Vectorscopes.

Last edited on: 06/12/2004 | First release: n/a | Author: Trevlac | Content by doom9.org